Hao Ai

I received my Master’s degree from Beihang University (2022–2025) under the supervision of Prof. Lu Sheng. My research interests lie in image and video generation, with a particular focus on controllable, high-fidelity, and real-time generative frameworks. During my internship at Xiaohongshu's InstantX Team (2023–2024), I led the deployment of AIGC solutions and conducted research on image stylization and super-resolution. I also collaborated closely with Prof. Gen Luo of Shanghai AI Laboratory on accelerating Multimodal Large Language Models (MLLMs). I am currently an AIGC Algorithm Engineer at CreateAI, where I continue to advance generative modeling and its practical applications.

Research

I am interested in research on diffusion models, image and video generation, and multimodal large language models.

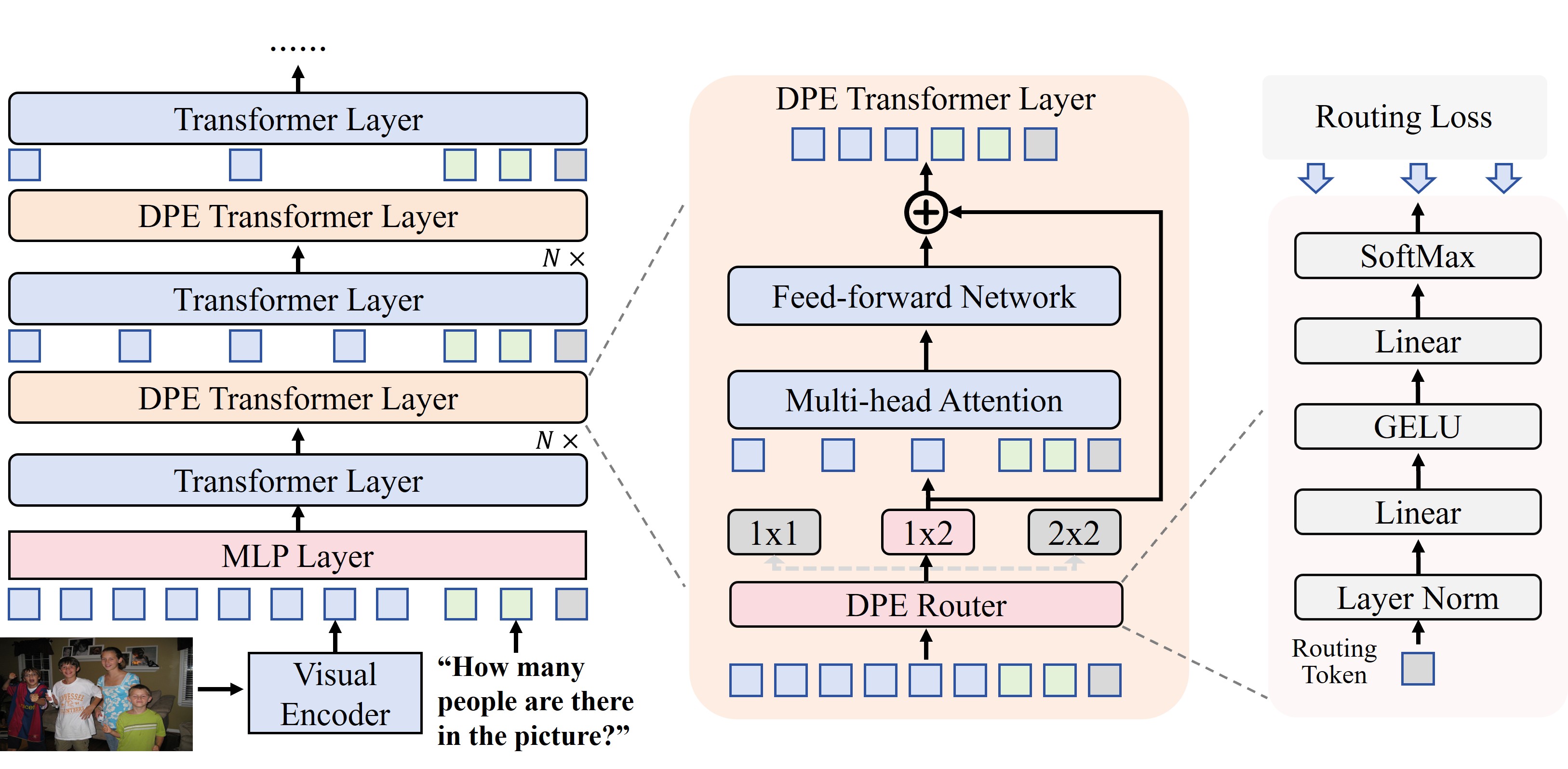

Dynamic Pyramid Network for Efficient Multimodal Large Language Model
Hao Ai, Kunyi Wang, Zezhou Wang, Hao Lu, Jin Tian, Yaxin Luo, Peng Xing, Jen-Yuan Huang, Huaxia Li, Gen Luo
arXiv, 2025
arxiv / code
We designed a specialized expert module that dynamically compresses visual features within the MLLM layers and integrates seamlessly into the existing MLLM training pipeline with no additional overhead. On LLaVA, our approach reduces FLOPs by 56% while improving performance by 0.74%.
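To make the idea of compressing visual tokens progressively with depth concrete, here is a toy sketch in plain Python. It is not the module from the paper: the average-pooling merge, the candidate strides, the error-threshold rule for picking a stride (`dynamic_pool`), and the compress-every-two-layers schedule (`run_pyramid`) are all hypothetical stand-ins for the learned expert described above.

```python
from typing import List, Sequence, Tuple

Token = List[float]


def avg_pool_tokens(tokens: Sequence[Token], stride: int) -> List[Token]:
    """Merge every `stride` consecutive token vectors into their mean."""
    pooled: List[Token] = []
    for start in range(0, len(tokens), stride):
        group = tokens[start:start + stride]
        dim = len(group[0])
        pooled.append([sum(t[d] for t in group) / len(group) for d in range(dim)])
    return pooled


def pooling_error(tokens: Sequence[Token], pooled: Sequence[Token], stride: int) -> float:
    """Mean squared error between each token and the pooled vector it was merged into."""
    total, count = 0.0, 0
    for i, tok in enumerate(tokens):
        merged = pooled[i // stride]
        total += sum((a - b) ** 2 for a, b in zip(tok, merged))
        count += len(tok)
    return total / count


def dynamic_pool(
    tokens: Sequence[Token],
    strides: Sequence[int] = (1, 2, 4),
    tol: float = 0.01,
) -> Tuple[List[Token], int]:
    """Hypothetical rule: keep the largest stride whose pooling error is within tol."""
    best: Tuple[List[Token], int] = (list(tokens), 1)
    for stride in sorted(strides):
        pooled = avg_pool_tokens(tokens, stride)
        if pooling_error(tokens, pooled, stride) <= tol:
            best = (pooled, stride)
    return best


def run_pyramid(tokens: Sequence[Token], num_layers: int, compress_every: int = 2) -> List[int]:
    """Return how many visual tokens each layer sees under a pyramid schedule."""
    current = list(tokens)
    counts: List[int] = []
    for layer in range(num_layers):
        # Hypothetical schedule: try to compress before every `compress_every`-th layer.
        if layer > 0 and layer % compress_every == 0:
            current, _ = dynamic_pool(current)
        counts.append(len(current))
    return counts


if __name__ == "__main__":
    flat = [[1.0, 2.0] for _ in range(16)]
    print(run_pyramid(flat, num_layers=6))  # [16, 16, 4, 4, 1, 1]
```

With identical input tokens the pooling error is zero, so the largest stride wins at every compression point and the token count shrinks from 16 to 4 to 1. Deeper layers then process far fewer visual tokens, which is where this kind of design saves compute; highly varied tokens would instead keep a smaller stride.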

InstantIR: Blind Image Restoration with Instant Generative Reference
Jen-Yuan Huang, Haofan Wang, Qixun Wang, Xu Bai, Hao Ai, Peng Xing, Jen-Tse Huang
arXiv, 2024
arxiv / demo / code
In this work, we implement an auto-regressive image restoration process using a pre-trained text-to-image diffusion model. At each denoising step, we first generate a restoration reference from the current diffusion latent, which then conditions the subsequent diffusion step.
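The per-step loop described above can be sketched as follows. This is a minimal illustration of the control flow only, assuming two hypothetical callables, `predict_reference` and `denoise_step`, that stand in for the reference generator and the reference-conditioned diffusion step; it does not reproduce InstantIR's networks or noise scheduler.

```python
from typing import Callable, List

Latent = List[float]

# Hypothetical signatures: the reference predictor maps (current latent, degraded input, t)
# to a restoration reference; the denoiser maps (latent, t, reference) to the next latent.
ReferenceFn = Callable[[Latent, Latent, int], Latent]
DenoiseFn = Callable[[Latent, int, Latent], Latent]


def reference_guided_restore(
    latent: Latent,
    degraded: Latent,
    timesteps: List[int],
    predict_reference: ReferenceFn,
    denoise_step: DenoiseFn,
) -> Latent:
    """Alternate between predicting a reference and taking a reference-conditioned step."""
    for t in timesteps:
        # 1) Generate a restoration reference from the current latent.
        reference = predict_reference(latent, degraded, t)
        # 2) Condition the next denoising step on that reference.
        latent = denoise_step(latent, t, reference)
    return latent


def toy_reference(latent: Latent, degraded: Latent, t: int) -> Latent:
    # Toy stand-in: simply reuse the degraded input as the reference.
    return degraded


def toy_step(latent: Latent, t: int, reference: Latent) -> Latent:
    # Toy stand-in: move the latent halfway toward the reference.
    return [0.5 * a + 0.5 * r for a, r in zip(latent, reference)]


if __name__ == "__main__":
    print(reference_guided_restore([0.0], [1.0], [999, 500, 0], toy_reference, toy_step))  # [0.875]
```

The point of the structure is that the reference is recomputed from the latent at every step, so later steps are conditioned on progressively cleaner references rather than on a single fixed one.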

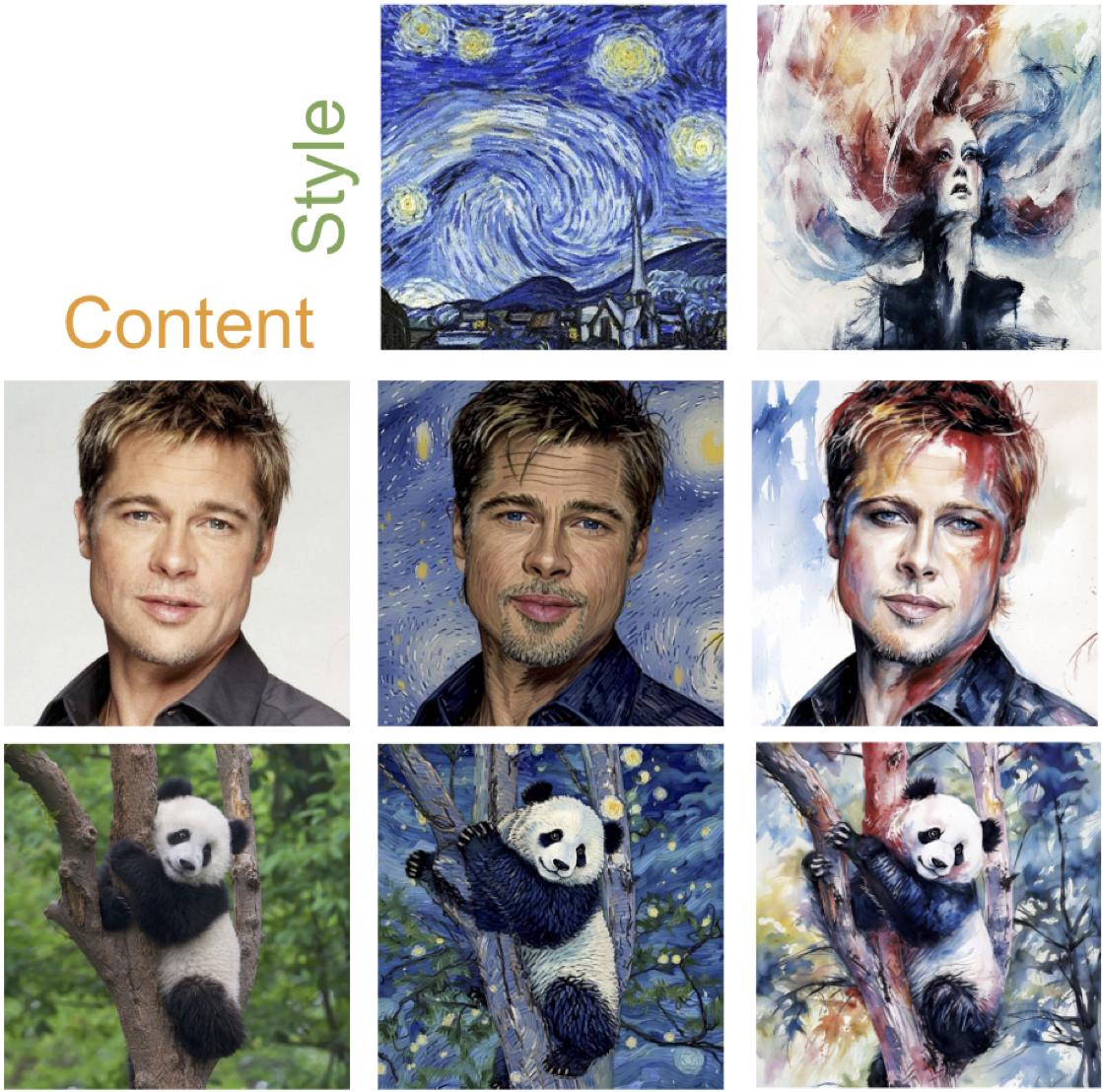

CSGO: Content-Style Composition in Text-to-Image Generation
Peng Xing, Haofan Wang, Yanpeng Sun, Qixun Wang, Xu Bai, Hao Ai, Jen-Yuan Huang, Zechao Li
NeurIPS, 2024
arxiv / demo / website / code
In this work, we develop an image stylization model named CSGO, which transfers the style of a reference image to a source image. To enable end-to-end training, we introduce an automatic construction pipeline and IMAGStyle, the first large-scale style transfer dataset, containing 210K {content; style; target} triplets.

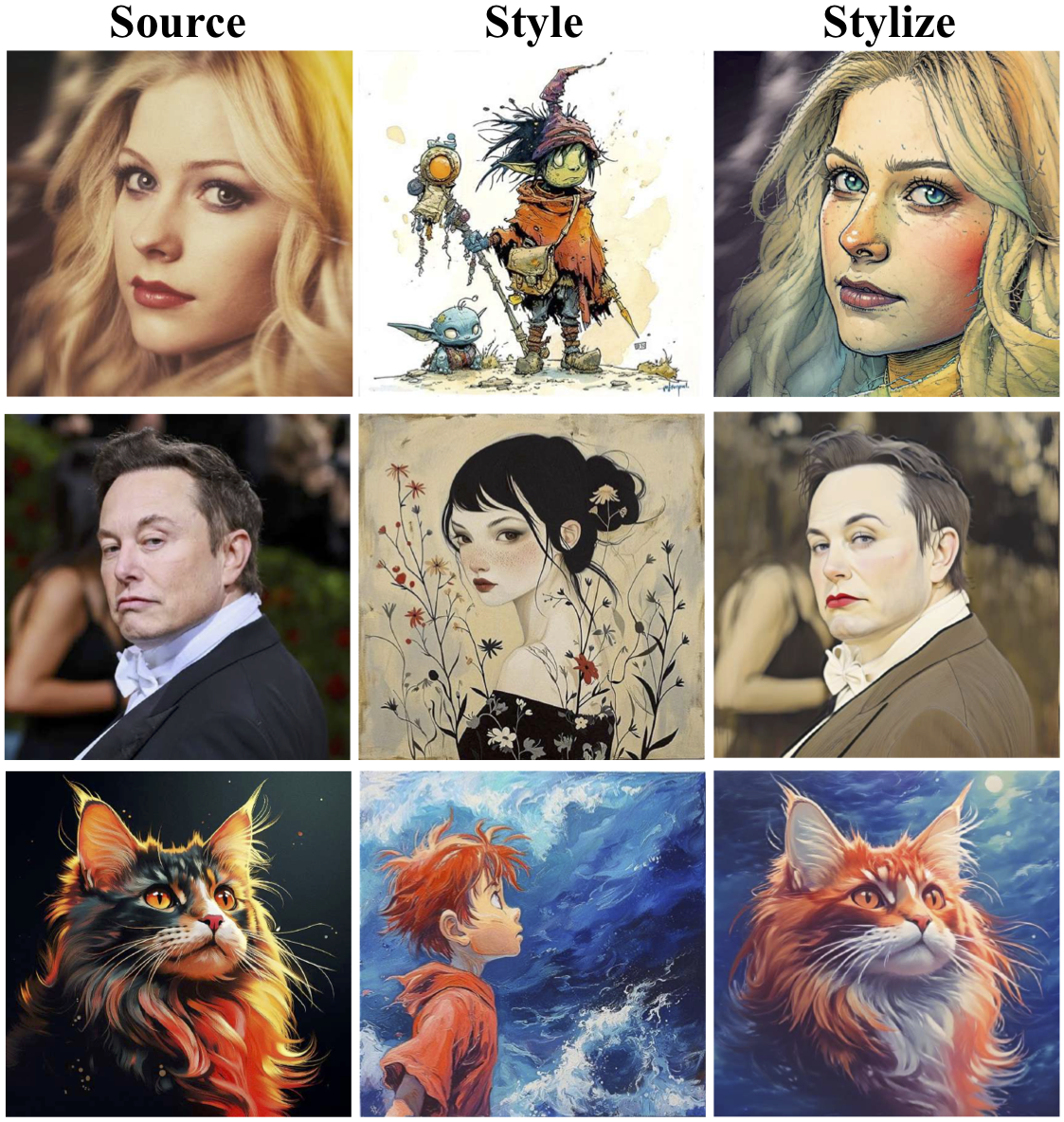

InstantStyle-Plus: Style Transfer with Content-Preserving in Text-to-Image Generation
Haofan Wang, Peng Xing, Jen-Yuan Huang, Hao Ai, Qixun Wang, Xu Bai
arXiv, 2024
arxiv / demo / website / code
In this paper, we explore natural style transfer while preserving content integrity. By analyzing different components of the Stable Diffusion UNet, we identify layers that specialize in processing style and content. We further introduce a style discriminator to strengthen the stylization of the output.

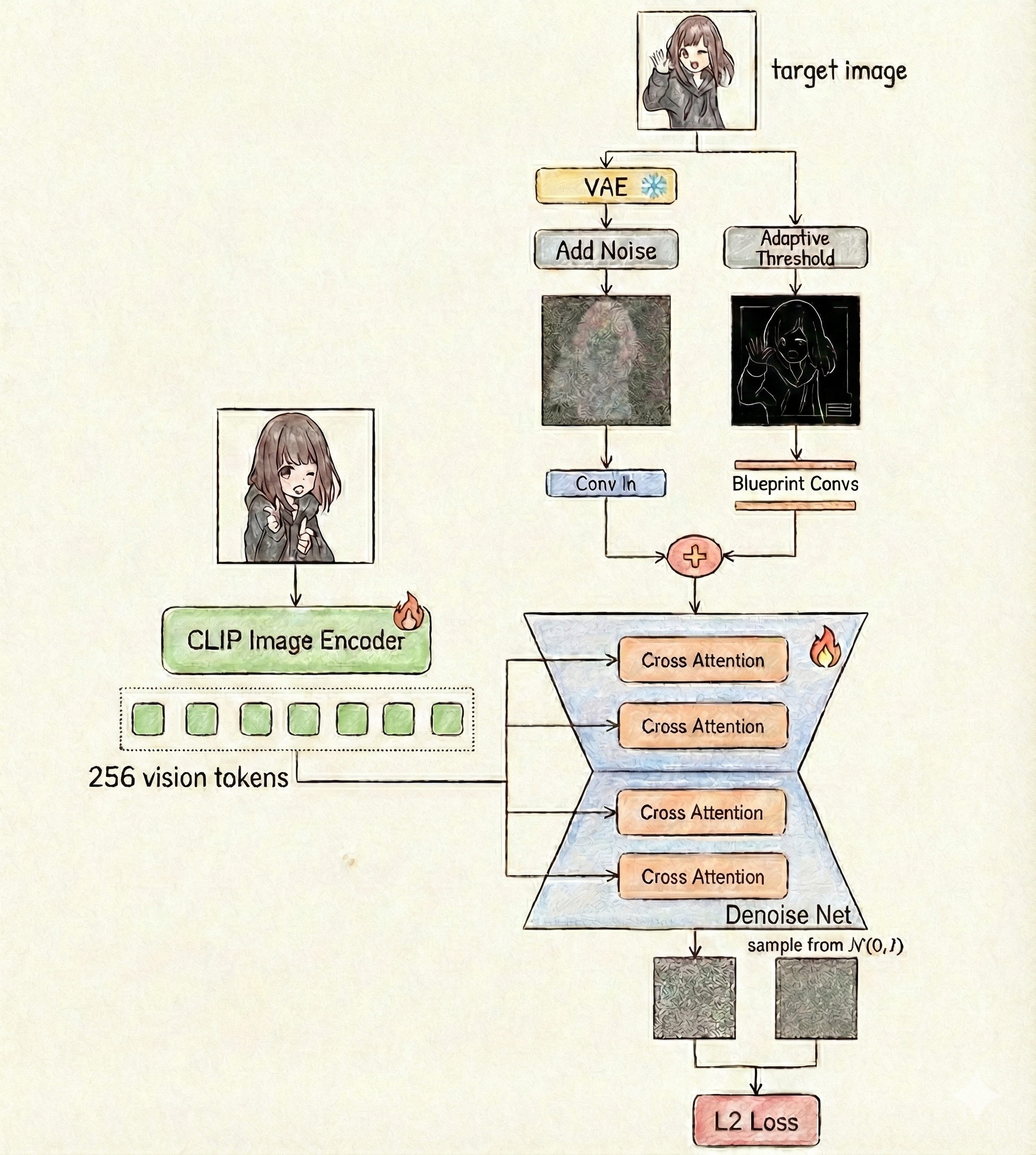

Stable Diffusion Reference Only: Multi-image Guided Diffusion model enables Controllable Coloring of Line Art
Hao Ai, Lu Sheng
arXiv, 2023
arxiv / demo / code
We constructed a large-scale anime character dataset (1M character pairs) and modified the Stable Diffusion 2.1 architecture to enable controllable line art colorization. The model even supports coloring with reference images of different anime characters.

Other Projects

These are some small projects conducted during my research that were not formalized into published papers.

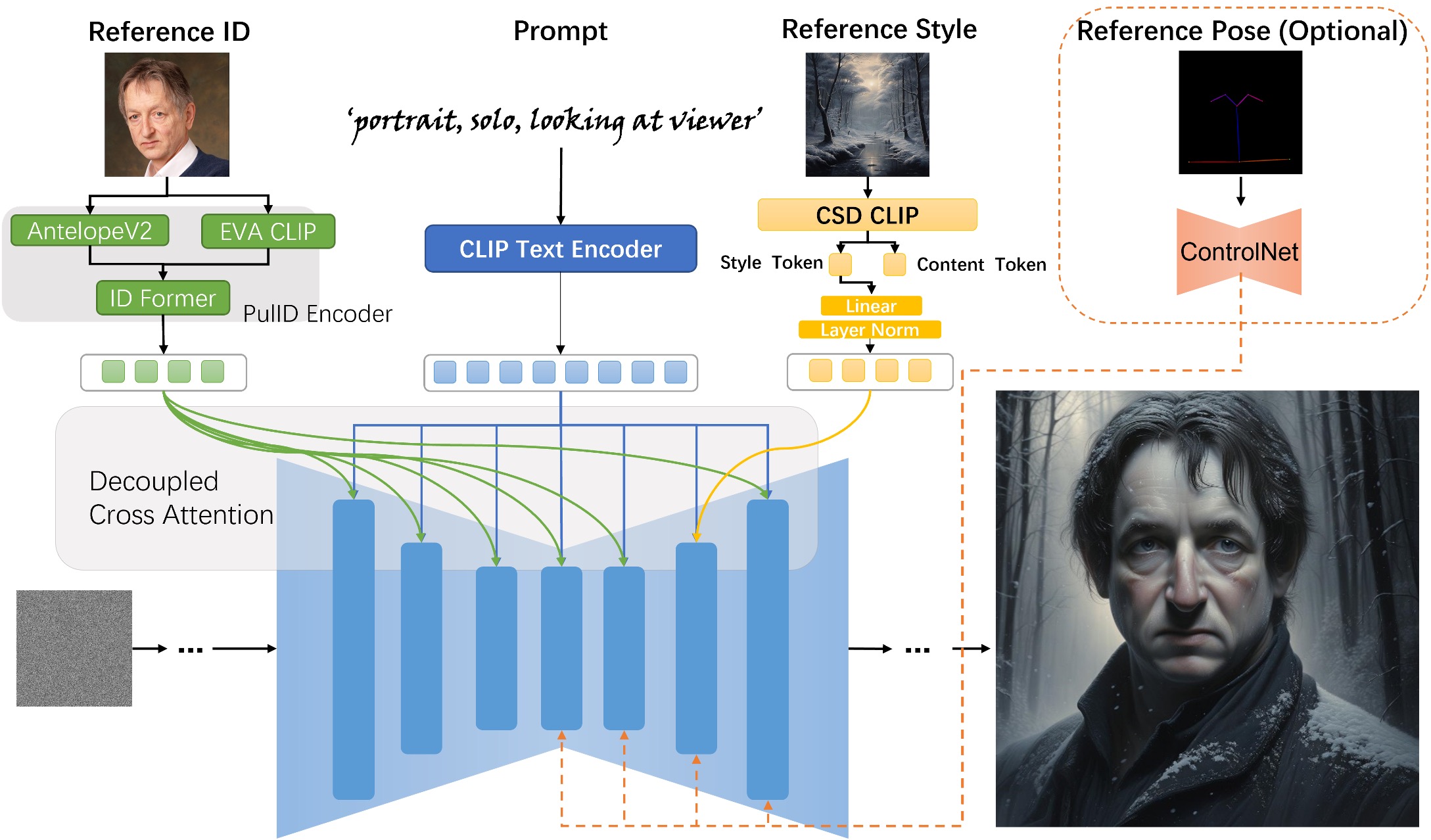

IP-Adapter-Art
2024-11-01
code / demo
We investigated the performance of various image encoder architectures within the IP-Adapter framework and released IP-Adapter weights built on a CSD image encoder. This model focuses specifically on style transfer and leverages a customized stylistic image dataset. Furthermore, we integrated the implementations of InstantStyle and PuLID to achieve stylized portrait generation.
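For context, the IP-Adapter framework injects image features through decoupled cross-attention: the text embedding $c_t$ and the image embedding $c_i$ get separate key/value projections, the query $Q$ from the UNet feature $Z$ is shared, and the two attention outputs are summed. The image embedding $c_i$ is produced by the image encoder (a CLIP image encoder in the original IP-Adapter), which is the component varied in the experiments above; the scale $\lambda$ sets the strength of the image condition at inference.

```latex
\begin{aligned}
Q &= Z W_q, \quad K = c_t W_k, \quad V = c_t W_v, \quad K' = c_i W'_k, \quad V' = c_i W'_v, \\
Z^{\text{new}} &= \operatorname{softmax}\!\left(\frac{Q K^\top}{\sqrt{d}}\right) V
  + \lambda \, \operatorname{softmax}\!\left(\frac{Q K'^\top}{\sqrt{d}}\right) V'.
\end{aligned}
```

Only the new projections $W'_k$ and $W'_v$ are trained, so swapping the image encoder changes $c_i$ while leaving the pretrained text cross-attention untouched.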